Imaging: DLC

DeepLabCut is a software package for inferring motion capture information from tracking video. Whereas Optitrack makes use of infra-red reflectors attached to the parts of the subject’s body that are to be tracked, DeepLabCut instead analyses a video of the subject using a deep neural network. The latter approach interferes less with the subject’s natural behaviour.

Schema

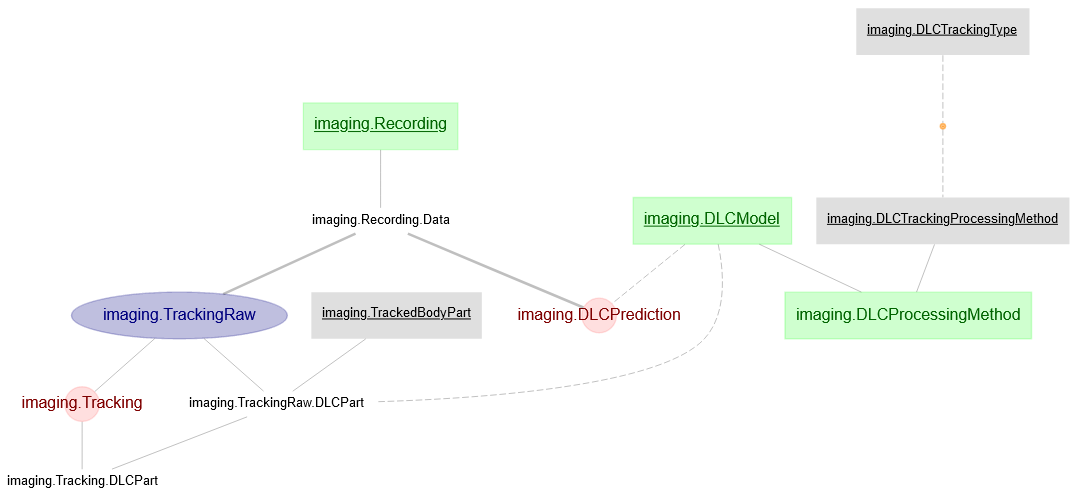

The DLC implementation in the imaging pipeline is shown below

DLC in the Imaging schema.¶

| Table | Description |
|---|---|
| DLCTrackingType | Determines which kinds of recording (e.g. a 1D wheel recording vs a 2D open field recording) will be processed beyond the TrackingRaw table. At present, only `openfield` recordings are supported. |
| DLCModel | Contains the full specification of a trained DLC model used in prediction. Due to the way DLC is designed, this data is derived from several separate files. When creating a new model, please pay careful attention to how body parts are named (see TrackedBodyPart). |
| DLCTrackingProcessingMethod | DLC by itself keeps track of individual body parts, not an entire subject, whereas the Imaging pipeline is built around the assumption that a subject can be assigned a single state vector at any given time. This table keeps track of the method used to calculate that state vector from the set of body-part coordinates provided by DLC. Note that the method has to know the exact name of each body part, so if a model uses new names for the same body parts, a new tracking processing method must be provided. |
| DLCProcessingMethod | Maps a DLCTrackingProcessingMethod to a DLCModel. This mapping ensures that the expected data columns (matching specific TrackedBodyPart entries) are available and named correctly. |
| RecordingDLC | Maps a specific Recording to a specific Model / Processing Method. |
| TrackedBodyPart | A list of the body parts that can be tracked. Please avoid adding to this list unnecessarily, and follow the naming schemes already in use (for example, if the body part `left_ear` already exists, please avoid also creating `leftear`, `LEFTEAR` and `Left_Ear`). |
| TrackingRaw.DLCPart | Stores the raw tracking data calculated via the method identified in DLCTrackingProcessingMethod. "Raw" here means the position of the subject (in arbitrary units) derived from the DLC data. |
| Tracking.DLCPart | Stores the processed tracking data, i.e. after conversion to real units, smoothing, and derivation of quantities such as speeds and angles. |
| DLCPrediction | Handles automated processing of a session. |
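To catch the near-duplicate body-part names described for TrackedBodyPart before adding a new entry, names can be compared after folding case and stripping separators. This is a hypothetical helper, not part of the pipeline, and the choice to ignore `_`, `-` and spaces is an assumption.

```python
from typing import Iterable, Optional


def normalise(name: str) -> str:
    """Fold case and drop separators, e.g. 'Left_Ear' -> 'leftear'."""
    return "".join(ch for ch in name.lower() if ch not in "_- ")


def find_near_duplicate(candidate: str, existing: Iterable[str]) -> Optional[str]:
    """Return an existing body-part name that clashes with candidate, or None."""
    key = normalise(candidate)
    for name in existing:
        if normalise(name) == key:
            return name
    return None
```

For example, `find_near_duplicate("LEFTEAR", ["left_ear", "right_ear"])` points back to `left_ear`, suggesting the existing name should be reused.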

Models and Processing Methods

Models

In machine learning, a “model” is the outcome of the training stage, which can then be used for inference.

In the case of DLC, the model is a trained neural network that analyses the video file and produces a table containing the position and probability of each tracked body part at each timestamp.

The model also defines which body parts will be tracked and, importantly, what those body parts are called.

Processing Methods

A Processing Method defines how DLC’s output is converted into a form that the Imaging pipeline can use.

DLC’s data output is a time-series of position data for each tracked body part. The Imaging schema is designed around the assumption of tracking a single subject, defined by a single position.

A single TrackingProcessingMethod is an algorithm that converts the positions (and probabilities) of a specific set of body parts into the position of a single body. If a different set of body parts is chosen (e.g. by a different model), a different TrackingProcessingMethod may be required.

For example, one model might use a camera underneath the arena to track the positions of four feet, while a second model might use a camera above the arena to track the positions of two ears. A TrackingProcessingMethod that accepts two time series named left_ear and right_ear cannot cope with four time series named left-front-foot, right-front-foot, left-back-foot and right-back-foot.
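The incompatibility in this example comes down to a set difference between the body-part names a method needs and the names a model tracks. The sketch below is hypothetical: `TrackingProcessingMethod`, `required_parts` and `missing_parts` are illustrative names, not the pipeline's tables or functions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackingProcessingMethod:
    """Hypothetical stand-in: a method declares the exact body-part names it needs."""
    name: str
    required_parts: frozenset


def missing_parts(method: TrackingProcessingMethod, model_parts) -> set:
    """Body parts the method needs but the model does not track (empty = compatible)."""
    return set(method.required_parts) - set(model_parts)


ears_method = TrackingProcessingMethod("two_ears", frozenset({"left_ear", "right_ear"}))
```

An empty result means the model can be paired with the method; a non-empty result lists exactly which names are missing, which is why a four-feet model cannot be paired with `ears_method`.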

Adding new processing methods

Initial work with DLC within the Moser group has focused on reproducing the tracking methods used in the past: tracking the position and angle of the subject’s head. Typically, this took the form of tracking the left and right ears instead of LEDs mounted on the subject’s head. Consequently, exactly the same calculation method could be used, with a pre-calculation stage that identifies which data corresponds to each “LED”.
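The pre-calculation stage can be pictured as a relabelling step followed by the legacy calculation. This is a minimal sketch, not the pipeline's code: the mapping `EAR_TO_LED`, the role names `led_1`/`led_2`, the per-frame `(x, y, likelihood)` tuples and the 0.9 likelihood cut-off are all hypothetical, and only the position (midpoint) part of the calculation is shown; the head-angle part is omitted.

```python
import math

# Hypothetical mapping from DLC body-part names to the "LED" roles that the
# legacy two-LED calculation expects.
EAR_TO_LED = {"left_ear": "led_1", "right_ear": "led_2"}


def relabel(parts, mapping):
    """Pre-calculation stage: rename body-part series to LED roles.

    Raises KeyError if the model does not provide one of the mapped parts.
    """
    return {role: parts[name] for name, role in mapping.items()}


def midpoint_positions(leds, min_likelihood=0.9):
    """Per-frame midpoint of led_1 and led_2, in the same units as the input.

    Frames where either point falls below min_likelihood become (nan, nan).
    """
    positions = []
    for (x1, y1, p1), (x2, y2, p2) in zip(leds["led_1"], leds["led_2"]):
        if p1 < min_likelihood or p2 < min_likelihood:
            positions.append((math.nan, math.nan))
        else:
            positions.append(((x1 + x2) / 2, (y1 + y2) / 2))
    return positions
```

Because the legacy calculation only ever sees `led_1` and `led_2`, supporting a new pair of body parts would only require a new mapping, provided the geometry of the two points is equivalent.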

Future methods may be added, including potentially 3D information, but these methods must first be formulated, written, and tested.

DLC Models

In the DeepLabCut model directory hierarchy, locating the model files requires:

- `cfg`, the project configuration (fields used: `Task`, `date`, `iteration`)
- `shuffle` (not in the config)
- `trainingset_fraction` (not in the config; computed as `cfg['TrainingFraction'][trainingsetindex]`)
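These inputs together determine the model's directory. The sketch below assumes the DeepLabCut 2.x project layout (`dlc-models/iteration-<n>/<Task><date>-trainset<percent>shuffle<shuffle>`); `model_folder` is a hypothetical helper, and other DLC versions may lay things out differently.

```python
from pathlib import PurePosixPath


def model_folder(cfg: dict, shuffle: int, trainingsetindex: int) -> PurePosixPath:
    """Relative path of a trained model inside a DLC project (assumed 2.x layout)."""
    # trainingset_fraction is not stored directly; it is looked up by index
    trainingset_fraction = cfg["TrainingFraction"][trainingsetindex]
    return PurePosixPath(
        "dlc-models",
        f"iteration-{cfg['iteration']}",
        # int() truncation is assumed to mirror DLC's own folder naming
        f"{cfg['Task']}{cfg['date']}-trainset{int(trainingset_fraction * 100)}shuffle{shuffle}",
    )
```

With `Task="mouse_openfield"`, `date="Jun30"`, `iteration=0`, `TrainingFraction=[0.95]`, shuffle 1 and training-set index 0, this gives `dlc-models/iteration-0/mouse_openfieldJun30-trainset95shuffle1`.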

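The minimal call for triggering DLC analysis is:

```python
import deeplabcut
deeplabcut.analyze_videos(config_path, video_paths, shuffle=shuffle, trainingsetindex=trainingsetindex)
```

Here `config_path` is the path to the project's `config.yaml` (from which DLC loads `cfg`). Pass `shuffle` and `trainingsetindex` as keyword arguments: the third positional parameter of `analyze_videos` is `videotype`, so passing them positionally would assign `shuffle` to the wrong parameter.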

Generated scorer/network name

For example: “DLC_resnet50_mouse_openfieldJun30shuffle1_1030000”

Format:

`"DLC_" + netname (resnet50) + "_" + cfg['Task'] (mouse_openfield) + cfg['date'] (Jun30) + "shuffle" + shuffle (1) + "_" + trainingsiterations (1030000)`

Note that `trainingsiterations` here is not the same as `dlc_iteration` (which is closer to a model version).

`trainingsiterations` is inferred from `cfg['snapshotindex']`: it is the training iteration count of the saved snapshot that `snapshotindex` selects.
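The naming rule above can be reproduced in a few lines, which is useful for checking a `model_name` string. This is a sketch under assumptions: it re-implements the format described here rather than calling DeepLabCut, and `training_iterations` assumes snapshot names of the form `snapshot-<iterations>` (file extensions already stripped), ordered numerically so that an index of `-1` selects the latest snapshot.

```python
import re


def training_iterations(snapshot_names, snapshotindex=-1):
    """Iteration count of the snapshot selected by snapshotindex."""
    iterations = sorted(
        int(re.fullmatch(r"snapshot-(\d+)", name).group(1)) for name in snapshot_names
    )
    return iterations[snapshotindex]


def scorer_name(netname, task, date, shuffle, trainingsiterations):
    """Assemble DLC_<net>_<Task><date>shuffle<shuffle>_<iterations>."""
    return f"DLC_{netname}_{task}{date}shuffle{shuffle}_{trainingsiterations}"
```

For example, with snapshots `snapshot-500000`, `snapshot-750000` and `snapshot-1030000` and the default index, the result is the example name above.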

This scorer name is not sufficiently unique, because it does not include the `dlc_iteration`.

In summary, the combination of (`dlc_task`, `dlc_date`, `dlc_iteration`, `dlc_shuffle`, `dlc_trainingsetindex`, `dlc_snapshotindex`) is sufficient to uniquely specify one DLC model.
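A frozen dataclass makes the point concrete: two keys that differ only in `dlc_iteration` are distinct, yet produce the same scorer name. `DLCModelKey` is a hypothetical illustration, not the pipeline's DLCModel table definition; the scorer format follows the one described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DLCModelKey:
    """Hypothetical key holding the six attributes that identify one DLC model."""
    dlc_task: str
    dlc_date: str
    dlc_iteration: int
    dlc_shuffle: int
    dlc_trainingsetindex: int
    dlc_snapshotindex: int

    def scorer_name(self, netname: str, trainingsiterations: int) -> str:
        # dlc_iteration and dlc_trainingsetindex do not appear in the name
        return (
            f"DLC_{netname}_{self.dlc_task}{self.dlc_date}"
            f"shuffle{self.dlc_shuffle}_{trainingsiterations}"
        )
```

Since the dataclass is frozen, keys are hashable and can be used in sets or dictionaries to detect duplicate registrations.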

Workflow

There are two stages to working with DLC:

1. Registering a model. This currently has to be done in code and is reserved for pipeline administrators, because of the naming complexity of models. Please contact Simon Ball or Horst Obenhaus to register a new model.
2. SessionDLC insertion. This can be done via the Imaging web GUI, to map a Session to a specific DLC model and processing method (see Imaging: Web GUI).

The Imaging pipeline supports automated DLC processing, assuming that the model requested is available to one or more of the workers running the pipeline. If the model is not available, the job will never complete.

Alternatively, users may run the model themselves (just as with Suite2p), although in this case several manual steps must also be taken. The Imaging pipeline provides a function that runs the model and performs these manual steps automatically:

```python
from dj_schemas.jobs_dlc import do_DLC_prediction

do_DLC_prediction(
    video_filepaths=(r"D:/mydata/my_video_1.mp4",),
    model_name="DLC_resnet50_mouse_openfieldJun30shuffle1_1030000",
    tif_filepaths=(r"D:/mydata/tif1.tif", r"D:/mydata/tif2.tif"),
)
```

If you run DLC via the command line instead, these manual steps are:

1. Open the `.pickle` file and add the attribute `Start Timestamp`, indicating the date/time at which the video was recorded (formatted as ISO 8601). A notebook is provided to help with this.
2. Name the files accordingly (see DeepLabCut for more discussion of file naming conventions):

```
session_directory
|- dlc_{basename}
   |- dlc_config_file.yml
   |- {modelname}.h5
   |- {modelname}includingmetadata.pickle
```
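Both manual steps can be scripted. This is a minimal sketch: `expected_dlc_files`, `stamp_start_time` and `add_start_timestamp` are hypothetical helpers, the file names come from the directory tree above, and storing `Start Timestamp` as a top-level key of the pickled dictionary is an assumption (the provided notebook remains the reference for where the pipeline expects it).

```python
import pickle
from datetime import datetime
from pathlib import Path


def expected_dlc_files(session_dir, basename, modelname):
    """Paths required by the directory layout above."""
    dlc_dir = Path(session_dir) / f"dlc_{basename}"
    return {
        "config": dlc_dir / "dlc_config_file.yml",
        "h5": dlc_dir / f"{modelname}.h5",
        "pickle": dlc_dir / f"{modelname}includingmetadata.pickle",
    }


def stamp_start_time(meta, recorded_at):
    """Return a copy of meta with 'Start Timestamp' as an ISO 8601 string.

    ASSUMPTION: the key sits at the top level of the pickled dict.
    """
    return {**meta, "Start Timestamp": recorded_at.isoformat()}


def add_start_timestamp(pickle_path, recorded_at: datetime):
    """Load the metadata pickle, add the timestamp, and write it back in place."""
    path = Path(pickle_path)
    with path.open("rb") as f:
        meta = pickle.load(f)
    with path.open("wb") as f:
        pickle.dump(stamp_start_time(meta, recorded_at), f)
```

`stamp_start_time` is kept free of file I/O so it can be checked on its own before any pickle is overwritten.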